Lethal Autonomous Weapon Systems (LAWS) represent a revolutionary shift in the landscape of military technology, standing at the intersection of advanced weaponry and artificial intelligence. These systems are characterised by their ability to operate independently, making critical decisions in combat without direct human intervention. This autonomy allows them to select and engage targets based on pre-programmed criteria using sophisticated algorithms and sensor technologies. LAWS mark a significant evolution from traditional weaponry, promising increased efficiency and speed in decision-making on the battlefield but also raising complex ethical and legal questions about the future of warfare. Their development and potential deployment continue to be subjects of intense debate and scrutiny within the global defence community.

What are Lethal Autonomous Weapon Systems (LAWS)?

Lethal Autonomous Weapon Systems (LAWS) are a class of military technologies that can independently search for, identify, and engage targets without human intervention. This autonomy in decision-making and action sets LAWS apart from traditional weapon systems, where human operators are directly involved in critical decision-making.

Key Characteristics of LAWS:

- Autonomous Target Selection: They can autonomously analyse vast amounts of data to identify potential targets based on pre-defined parameters.

- Independent Engagement Capability: Once a target is identified, LAWS can engage it without requiring explicit approval from a human operator.

- Self-sufficiency in Operation: They are designed to function in complex environments, making real-time decisions without remote control.

Technological Components Enabling Autonomy

Artificial Intelligence (AI) forms the brain of LAWS. It enables these systems to process information, make decisions, and learn from experience. AI algorithms can analyse patterns, recognise targets, and make tactical decisions. Machine Learning (ML) algorithms allow LAWS to improve their decision-making over time: by learning from past operations and simulations, these systems adapt to new situations and enhance their operational effectiveness. Various ML algorithms have been developed to address the many challenges an autonomous system faces. They are versatile enough to be used in both commercial products and military systems, as the following examples show.

a) Computer Vision Algorithms (like CNNs):

- Commercial Products: Security cameras with facial recognition (like Nest Cam), autonomous vehicles (like Tesla’s Autopilot), and medical imaging software for diagnostic purposes.

- Weapon Systems: Some precision-guided munitions use imaging seekers to identify and lock onto targets. (Widely cited smart munitions such as the AGM-114 Hellfire missile and the Paveway series of bombs are primarily laser-guided, homing on a designated spot rather than recognising images.) Advanced surveillance drones also use computer vision for reconnaissance and target identification. Naval combat systems, such as those on the Chinese Type 055 destroyer, are reported to pair radar with automated missile fire control.

b) Reinforcement Learning:

- Commercial Products: Video game AI (like OpenAI’s Dota 2 bots), robotics (such as those developed by Boston Dynamics), and recommendation systems in streaming services (like Netflix or Spotify).

- Military Applications: Used in simulation-based training systems for military tactics (e.g., advanced flight simulators and the Virtual Battlespace series used by the US Marines) and in some autonomous combat vehicles for dynamic decision-making in complex environments.

c) Decision Trees and Random Forests:

- Commercial Products: Credit scoring systems used by financial institutions, customer support chatbots, and email filtering systems (like spam filters in Gmail).

- Weapon Systems: Electronic warfare systems utilise these algorithms for signal classification and threat analysis (e.g. US Navy’s AN/SLQ-32 Electronic Warfare Suite). They can also be used in cyber defence systems for identifying potential threats and anomalies (e.g. Cisco Stealthwatch).
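To make the idea behind decision trees concrete, here is a minimal sketch based on the email-filtering example above. A tree classifies an input by walking through a series of feature tests until it reaches a label. The tree, the feature names (`link_count`, `exclamation_marks`) and the thresholds are hypothetical and hand-written for illustration; a real filter would learn them from labelled email.

```python
# Hypothetical, hand-written tree. A real spam filter would learn the
# tests and thresholds from labelled training data.
TREE = {
    "feature": "link_count",
    "threshold": 3,
    "below": {
        "feature": "exclamation_marks",
        "threshold": 5,
        "below": "not spam",
        "at_or_above": "spam",
    },
    "at_or_above": "spam",
}


def classify(node, features):
    """Walk the tree from the root until a leaf label (a string) is reached."""
    while isinstance(node, dict):
        value = features[node["feature"]]
        branch = "below" if value < node["threshold"] else "at_or_above"
        node = node[branch]
    return node


label = classify(TREE, {"link_count": 1, "exclamation_marks": 0})
```

A random forest extends this by training many such trees on different samples of the data and taking a majority vote across them.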

d) Deep Learning and Neural Networks:

- Commercial Products: Voice assistants (like Amazon Alexa and Apple Siri), language translation services (like Google Translate), and image generation tools (such as those in Adobe Photoshop).

- Weapon Systems: Autonomous drones and unmanned combat aerial vehicles (UCAVs) use neural networks for navigation, target tracking, and threat assessment. Deep learning is also used in some missile defence systems for trajectory prediction and interception decisions.

e) Natural Language Processing (NLP):

- Commercial Products: Chatbots for customer service, sentiment analysis tools for social media monitoring, and virtual assistants (like Google Assistant and Microsoft Cortana).

- Military Applications: Used in intelligence gathering to analyse communications and extract valuable information (e.g. the UK’s Government Communications Headquarters). NLP is also employed in command and control systems for processing spoken commands or textual data (e.g., the voice recognition feature of the F-35 Lightning II).

f) Pathfinding and Navigation Algorithms (like A* or Dijkstra’s):

- Commercial Products: GPS navigation systems (like Google Maps and Waze), route planning software in logistics, and pathfinding in video games.

- Weapon Systems: Used in autonomous ground vehicles for navigating complex terrains and in missile guidance systems to calculate optimal paths to targets while avoiding obstacles.
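The pathfinding algorithms named in this heading are standard textbook methods. As an illustration of the commercial route-planning use, the sketch below runs Dijkstra's algorithm on a small grid map, the kind of search a logistics planner or video game might perform. The function name `shortest_path` and the grid encoding (`#` for a blocked cell) are assumptions made for this example, not taken from any particular product.

```python
import heapq


def shortest_path(grid, start, goal):
    """Dijkstra's algorithm on a 4-connected grid with unit step cost.

    grid: list of equal-length strings; '#' marks a blocked cell.
    start, goal: (row, col) tuples of free cells.
    Returns the list of cells from start to goal, or None if unreachable.
    """
    rows, cols = len(grid), len(grid[0])
    dist = {start: 0}   # best known cost to reach each cell
    prev = {}           # predecessor of each cell on its best path
    queue = [(0, start)]
    while queue:
        d, cell = heapq.heappop(queue)
        if cell == goal:
            break
        if d > dist[cell]:
            continue  # stale queue entry; a cheaper route was already found
        r, c = cell
        for nr, nc in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
            if 0 <= nr < rows and 0 <= nc < cols and grid[nr][nc] != "#":
                nd = d + 1
                if nd < dist.get((nr, nc), float("inf")):
                    dist[(nr, nc)] = nd
                    prev[(nr, nc)] = cell
                    heapq.heappush(queue, (nd, (nr, nc)))
    if goal not in dist:
        return None
    # Rebuild the route by following predecessors back from the goal.
    path = [goal]
    while path[-1] != start:
        path.append(prev[path[-1]])
    path.reverse()
    return path


# Hypothetical 3x3 map: the top two cells of the right-hand column are blocked.
route = shortest_path(["..#", "..#", "..."], (0, 0), (2, 2))
```

A* follows the same structure but orders the queue by cost so far plus a heuristic estimate of the remaining distance, which usually lets it explore fewer cells.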

Types of LAWS Across Different Domains

Air

In the aerial domain, LAWS are primarily represented by drones and Unmanned Aerial Vehicles (UAVs) equipped with sophisticated autonomous targeting systems. These systems can independently conduct surveillance, reconnaissance, and even strike missions.

- Examples:

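- MQ-9 Reaper: A remotely piloted drone known for its surveillance and targeted strike capabilities. Human operators fly it and authorise its strikes, so it is better described as a precursor to LAWS than as a fully autonomous system.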

- Harpy Drone: An autonomous loitering munition system designed to detect and destroy radar emitters.

Land

On land, LAWS manifest as robotic systems that can perform various functions, from surveillance to direct combat roles. These systems are designed to operate in diverse terrains and can be crucial in missions that are too risky for human soldiers.

- Examples:

- Israeli Guardium: An unmanned ground vehicle used for surveillance, reconnaissance, and active patrol.

- Russian Uran-9: A robotic combat ground vehicle equipped with weaponry and sensors for combat roles.

Sea

In maritime environments, LAWS include Autonomous Underwater Vehicles (AUVs) and Unmanned Surface Vehicles (USVs). These systems are pivotal for tasks like mine detection, anti-submarine warfare, and oceanographic data collection, functioning autonomously in challenging conditions on and below the surface.

- Examples:

- Sea Hunter: An autonomous trimaran designed for anti-submarine warfare and mine countermeasures.

- REMUS AUVs: Small, autonomous underwater vehicles used for hydrographic surveys, mine countermeasures, and surveillance.

Each of these systems demonstrates the versatility and adaptability of LAWS across different domains, showing their potential to impact future military strategies and operations significantly.

Historical Use of LAWS

The deployment of LAWS in various conflicts has underscored their growing significance in modern warfare:

a) Libyan Conflict: The Kargu-2, a Turkish-made autonomous drone, was reportedly used in Libya to target soldiers fighting for General Khalifa Haftar[1]. This instance is significant as it possibly represents one of the first known uses of autonomous drones to attack human targets directly.

b) Targeted Elimination of High-Profile Figures:

- Jihadi John: The MQ-9 Reaper drone played a crucial role in the elimination of Mohammed Emwazi, widely known as Jihadi John, a member of ISIS[2]. This operation underscored the precision of armed drones in targeting specific individuals, although the strike was directed by human operators rather than by an autonomous system.

- Qasem Soleimani: Another notable use of the MQ-9 Reaper was in the targeted killing of Qasem Soleimani, the commander of the Iranian Quds Force. This action highlighted the strategic use of armed drones in geopolitical power plays and the potential for escalating international tensions.

c) Conflicts in Afghanistan, Iraq, and Syria: Armed drones, most of them remotely piloted with growing levels of automation, have been extensively used for surveillance and targeted strikes, particularly in counter-terrorism operations.

d) Israeli Defence Systems: Israel’s use of systems like the Iron Dome and Harpy drones in conflict scenarios has demonstrated the capabilities of LAWS, the former in intercepting incoming threats and the latter in targeting enemy radar infrastructure.

These instances highlight the diverse applications of LAWS in modern conflicts, from targeted strikes against high-profile figures to defensive systems and autonomous attacks on combatants. They also highlight the ethical, legal, and operational challenges of deploying these advanced systems in warfare.

Application Scenarios for LAWS

a) Defensive Operations

- Border Security: LAWS can be used to monitor and secure active borders. They can autonomously detect illegal crossings and engage identified threats, reducing the risk to human personnel.

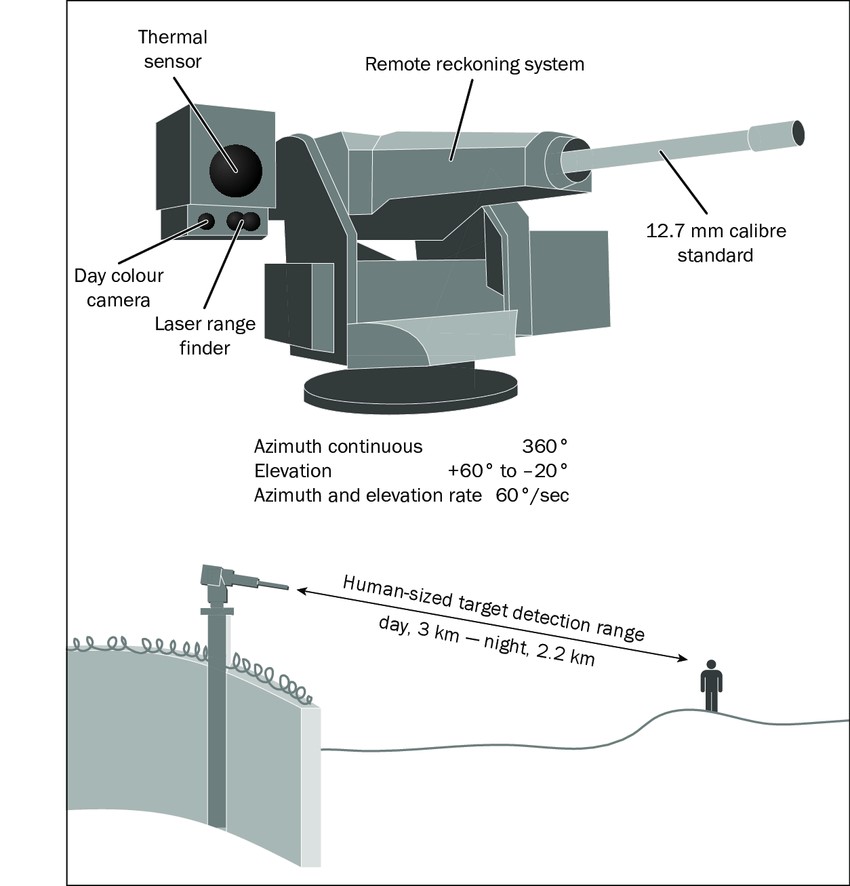

Example: Super aEgis II: This South Korean-made sentry gun system can autonomously detect and engage targets.[3] Equipped with various sensors and weaponry, it can fire both lethal and non-lethal rounds and issue verbal warnings before engaging.

Super aEgis II – South Korean-made sentry gun

b) Offensive Operations

- Targeted Strikes: Precision strikes by autonomous systems such as drones are used to neutralise high-value targets while keeping collateral damage to a minimum.

- Enemy Infrastructure Sabotage:

  - Communication and Supply Line Disruption: Small, autonomous drones or robotic systems can infiltrate enemy territory to disrupt or destroy critical infrastructure like communication networks and supply lines, effectively crippling the enemy’s operational capabilities.

  - Destruction of Strategic Assets: These systems can also target energy facilities, military bases, and logistics hubs, causing significant setbacks to enemy forces.

c) Specialised Applications

- Sea Mine Laying by Submarine-Launched Vessels: AUVs can strategically deploy sea mines to protect maritime borders or hinder enemy naval movements.

- Robots in Tunnel and Urban Warfare: LAWS can operate in complex urban environments or tunnel systems, where they can gather intelligence, engage enemy combatants, or conduct sabotage operations.

These scenarios underscore the multifaceted roles of LAWS in modern military strategies.

United Nations Stance on LAWS

The United Nations has engaged in ongoing debates regarding LAWS. Key points include:

- Discussions in UN Forums: The UN has hosted discussions on LAWS, primarily under the Convention on Certain Conventional Weapons (CCW). Central to these debates are the ethical, legal, and humanitarian implications of deploying autonomous weapons capable of making life-or-death decisions.

- No Binding Resolution Yet: There is no binding international treaty or resolution specifically regulating or banning the use of LAWS. However, there have been calls from various member states and advocacy groups for such measures.

- Focus on Human Control: A common theme in these discussions is maintaining meaningful human control over lethal force decisions to ensure compliance with international humanitarian law and ethical principles.

Options for India in the Realm of LAWS

Current Capabilities and Interests

- Existing Developments: India has been actively developing its drone and robotics capabilities, with a focus on both surveillance and combat applications. This includes advancements in UAVs and research into AI for military use.

- Strategic Partnerships: Collaborations with other countries and defence contractors are pivotal in enhancing India’s capabilities in LAWS. India is interested in acquiring advanced technology from global leaders in defence and in co-developing it with them.

Potential Development and Acquisition Strategies

- Indigenous Development: Emphasising self-reliance in defence, India may invest in indigenous research and development programs for LAWS, fostering innovation within the country’s robust technology and defence sectors.

- International Collaborations: Engaging in partnerships for technology transfer and joint development projects with other nations could be a strategic approach to advance capabilities in LAWS quickly.

- Private Sector Involvement: Leveraging the expertise of the Indian private sector and start-ups in AI and robotics can accelerate the development of sophisticated and customised LAWS.

Ethical and Strategic Considerations

- Compliance with International Law: India’s engagement with LAWS will require navigating complex international laws and conventions, ensuring that any development or deployment aligns with legal and ethical standards.

- Geopolitical Context: Given India’s unique security challenges, including border tensions and regional conflicts, LAWS could offer strategic advantages in enhancing border security and counter-terrorism efforts.

- Ethical Frameworks: The development and deployment of LAWS must be underpinned by robust ethical frameworks to address concerns around autonomy in warfare and the potential impact on civilian populations.

In summary, India’s approach to Lethal Autonomous Weapon Systems should blend indigenous development, international collaborations, and ethical considerations, reflecting its strategic security needs and commitment to international norms.

Disclaimer: The views and opinions expressed by the author do not necessarily reflect the views of the Government of India and Defence Research and Studies.

Title image courtesy: ICRC

References:

[1] Kyle Hiebert, “Are Lethal Autonomous Weapons Inevitable? It Appears So,” Centre for International Governance Innovation, January 27, 2022, https://www.cigionline.org/articles/are-lethal-autonomous-weapons-inevitable-it-appears-so/. Accessed on November 17, 2023.

[2] Ben Quinn, Richard Norton-Taylor, and Alice Ross, “Mohammed Emwazi Killed in Raqqa Strike, Says Rights Group,” The Guardian, November 13, 2015, sec. UK news, https://www.theguardian.com/uk-news/2015/nov/13/jihadi-john-definitely-killed-syria-raqqa-dead. Accessed on November 5, 2023.

[3] Vincent Boulanin and Maaike Verbruggen, “Mapping the Development of Autonomy in Weapon Systems,” SIPRI, November 2017, https://www.sipri.org/publications/2017/policy-reports/mapping-development-autonomy-weapon-systems. Accessed on October 31, 2023.